Troubleshoot universe issues

YugabyteDB Anywhere (YBA) allows you to monitor and troubleshoot issues that arise in universes.

Use metrics

Use the universe Metrics page to monitor performance metrics for your universe and ensure that the universe configuration meets its performance requirements.

The Metrics page displays graphs representing information on operations, latency, and other parameters accumulated over time. By examining specific metrics, you can diagnose and troubleshoot issues.

You access metrics by navigating to Universes > Universe-Name > Metrics.

For information on the available metrics, refer to Performance metrics.

Use node status

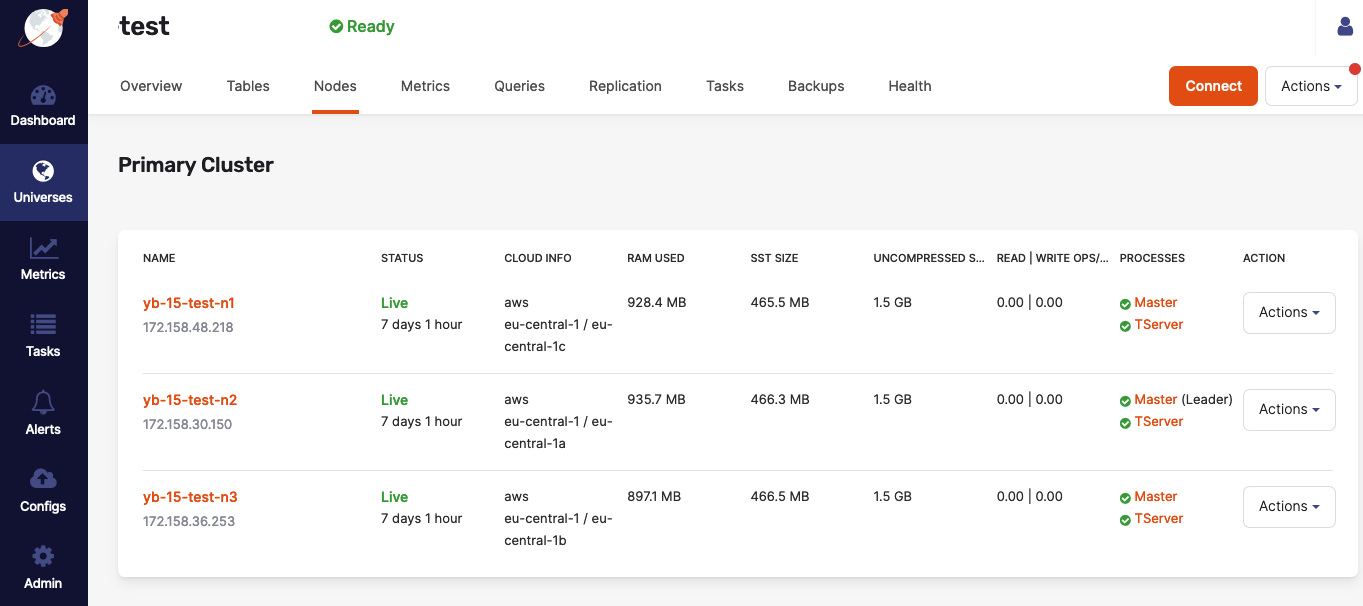

You can check the status of the YB-Master and YB-TServer on each YugabyteDB node by navigating to Universes > Universe-Name > Nodes, as per the following illustration:

If issues arise, additional information about each master and YB-TServer is available on their respective Details pages, or by accessing <node_IP>:7000 for YB-Master servers and <node_IP>:9000 for YB-TServers (unless the configuration of your on-premises data center or cloud-provider account prevents the access, in which case you may consult Check YugabyteDB servers).
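If you have network access to the nodes, you can quickly confirm that these web UIs respond by probing them from a shell. The following Bash sketch is not part of YBA. The helper names `ui_url` and `probe_node` are hypothetical. It assumes that `curl` is installed and that the default ports 7000 and 9000 are in use. A node that does not run a YB-Master is expected to report `000` for port 7000.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: print the HTTP status code returned by the YB-Master (7000)
# and YB-TServer (9000) web UIs on each node passed as an argument.
# A status of 000 means curl could not connect within the time limit.

ui_url() {  # ui_url <ip> <port>
  printf 'http://%s:%s/\n' "$1" "$2"
}

probe_node() {  # probe_node <ip>
  local ip=$1 port code
  for port in 7000 9000; do
    # -m 5 caps each request at five seconds; -w prints only the status code.
    code=$(curl -s -o /dev/null -m 5 -w '%{http_code}' "$(ui_url "$ip" "$port")")
    printf '%s:%s %s\n' "$ip" "$port" "${code:-000}"
  done
}

# Probe every node given on the command line (does nothing when no IPs are given).
for ip in "$@"; do
  probe_node "$ip"
done
```

For example, save the sketch as `probe_nodes.sh` (a hypothetical file name) and run `bash probe_nodes.sh 10.1.13.150 10.1.13.151 10.1.13.152`. A code of `200` indicates that the corresponding server's web UI is serving requests.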

Check host resources on the nodes

To check host resources on your YugabyteDB nodes, run the following script, replacing the IP addresses with the IP addresses of your YugabyteDB nodes:

for IP in 10.1.13.150 10.1.13.151 10.1.13.152; \
do echo $IP; \
  ssh $IP \
  'echo -n "CPUs: "; grep -c "^processor" /proc/cpuinfo; \
  echo -n "Mem: "; free -h | grep Mem | tr -s " " | cut -d" " -f 2; \
  echo -n "Disk: "; df -h / | grep -v Filesystem'; \
done

The output should look similar to the following:

10.1.12.103
CPUs: 72
Mem: 251G
Disk: /dev/sda2 160G 13G 148G 8% /
10.1.12.104
CPUs: 88
Mem: 251G
Disk: /dev/sda2 208G 22G 187G 11% /
10.1.12.105
CPUs: 88
Mem: 251G
Disk: /dev/sda2 208G 5.1G 203G 3% /
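In the sample output, one node has 72 CPUs while the other two have 88. Such differences are easy to miss across many nodes, so you can pipe the script's output through a small filter that flags them. The following sketch is a hypothetical helper, not a YBA feature. It assumes the output format shown above (an IP line followed by `CPUs:`, `Mem:`, and `Disk:` lines), and it compares only the CPU and memory values.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read the host-resource report on stdin and warn when the
# CPU count or total memory is not the same on every node.
check_uniform_resources() {
  awk '
    # An IPv4 address on its own line starts a new node section.
    /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ { ip = $1; hosts[ip] = 1; next }
    $1 == "CPUs:" || $1 == "Mem:" {
      key = $1; sub(/:$/, "", key)
      val[key, ip] = $2
      # Count how many distinct values each key has across all nodes.
      if (!((key, $2) in seen)) { seen[key, $2] = 1; distinct[key]++ }
    }
    END {
      for (k in distinct) {
        if (distinct[k] > 1) {
          printf "WARNING: %s value differs across nodes\n", k
          for (h in hosts) printf "  %s %s\n", h, val[k, h]
        }
      }
    }
  '
}
```

For example, append `| check_uniform_resources` after `done` in the preceding loop. For the sample output, the filter reports that the CPUs value differs and lists each node's count. The order of the listed nodes is not guaranteed.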

Troubleshoot universe creation

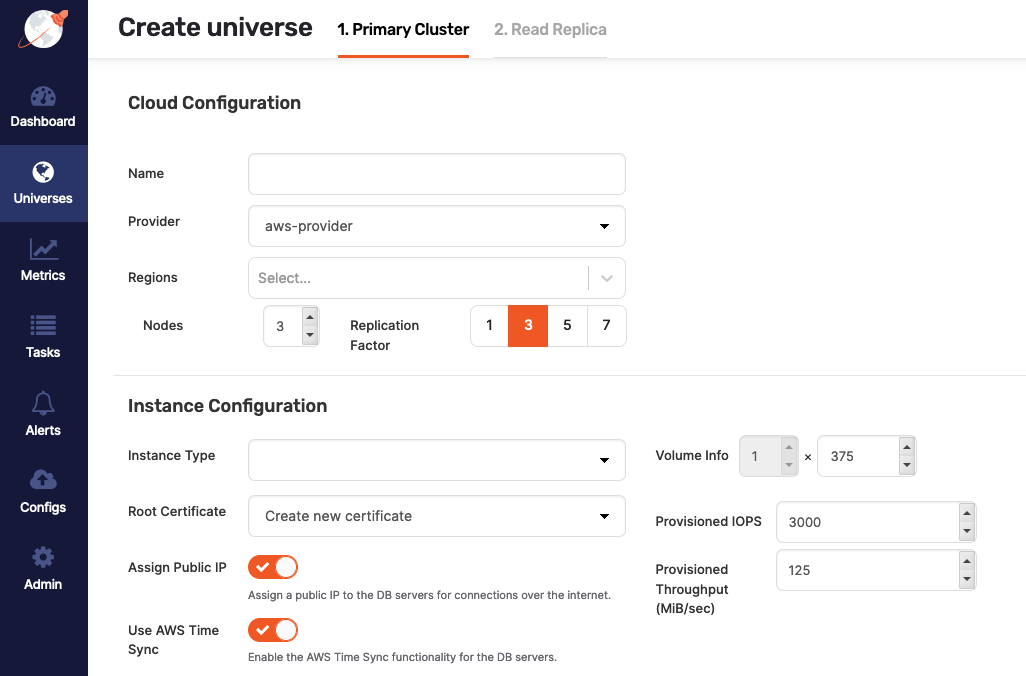

You typically create universes by navigating to Universes > Create universe > Primary cluster, as per the following illustration:

If you disable Assign Public IP during the universe creation, the process may fail under certain conditions, unless you either install the following packages on the machine image or make them available on an accessible package repository:

- chrony, if you enabled Use Time Sync for the selected cloud provider.

- python-minimal, if YugabyteDB Anywhere is installed on Ubuntu 18.04.

- python-setuptools, if YugabyteDB Anywhere is installed on Ubuntu 18.04.

- python-six or python2-six (the Python2 version of Six).

- policycoreutils-python, if YugabyteDB Anywhere is installed on Oracle Linux 8.

- selinux-policy must be on an accessible package repository, if YugabyteDB Anywhere is installed on Oracle Linux 8.

- locales, if YugabyteDB Anywhere is installed on Ubuntu.

The preceding package requirements are applicable to YugabyteDB Anywhere version 2.13.1.0.

If you are using YugabyteDB Anywhere version 2.12.n.n and disable Use Time Sync during the universe creation, you also need to install the ntpd package.
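Before you create a universe with Assign Public IP disabled, you can check a machine image for the packages listed above. The following Bash sketch is an illustration only, and its helper names are hypothetical. It uses `dpkg-query` on Debian/Ubuntu images and `rpm` on RHEL-family images such as Oracle Linux. It does not handle either-or requirements such as python-six or python2-six, so check those individually.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: list which of the given packages are not installed
# on the current machine (run this on an instance built from the machine image).

pkg_installed() {  # pkg_installed <package>; returns 0 if installed
  if command -v dpkg-query >/dev/null 2>&1; then
    # Debian/Ubuntu: installed packages report the status "install ok installed".
    dpkg-query -W -f='${Status}' "$1" 2>/dev/null | grep -q 'install ok installed'
  elif command -v rpm >/dev/null 2>&1; then
    # RHEL family (for example, Oracle Linux 8).
    rpm -q "$1" >/dev/null 2>&1
  else
    return 2
  fi
}

missing_packages() {  # missing_packages <package>...; prints each missing package
  local p
  for p in "$@"; do
    pkg_installed "$p" || printf '%s\n' "$p"
  done
}
```

For example, on an Ubuntu 18.04 image with Use Time Sync enabled, run `missing_packages chrony python-minimal python-setuptools locales`. An empty result means that all of the listed packages are installed.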

Use support bundles

A support bundle is an archive generated at the universe level from data collected silently from each node. Generation continues even if file collection fails for certain components of a node. The bundle contains the files required for diagnosing and troubleshooting a problem. The diagnostic information is provided by the following types of files:

- Application logs from YugabyteDB Anywhere.

- Universe logs, which are the YB-Master and YB-TServer log files from each node in the universe, as well as PostgreSQL logs available under the YB-TServer logs directory.

- Output files (.out) generated by the YB-Master and YB-TServer.

- Error files (.err) generated by the YB-Master and YB-TServer.

- G-flag configuration files containing the flags set on the universe.

- Instance files that contain the metadata information from the YB-Master and YB-TServer.

- Consensus meta files containing consensus metadata information from the YB-Master and YB-TServer.

- Tablet meta files containing the tablet metadata from the YB-Master and YB-TServer.

The diagnostic information can be analyzed locally or the bundle can be forwarded to the Yugabyte Support team.

The focus is to ensure that support bundle generation is successful in most cases, regardless of any individual file collection failures.

You can create a support bundle as follows:

-

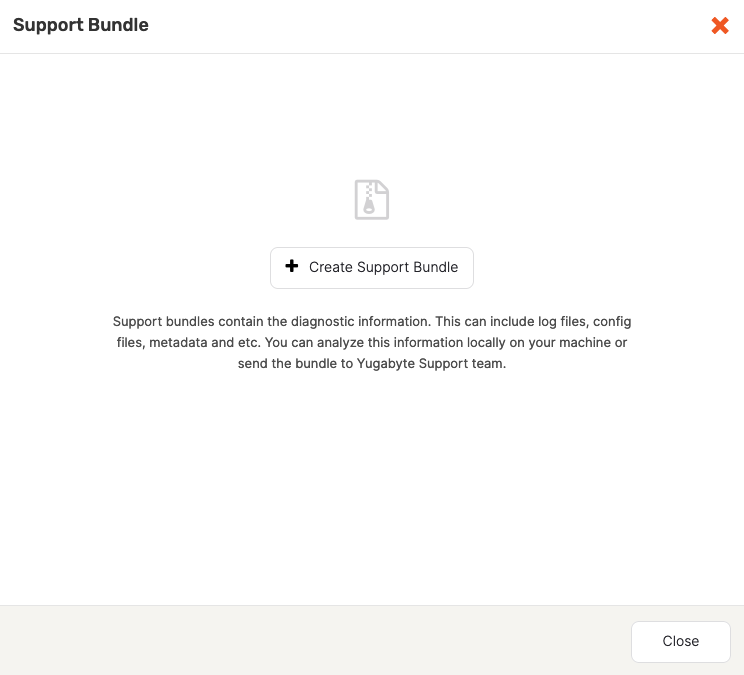

Open the universe that needs to be diagnosed and click Actions > Support Bundles.

-

If the universe already has support bundles, they are displayed by the Support Bundle dialog. If there are no bundles for the universe, use the Support Bundles dialog to generate a bundle by clicking Create Support Bundle, as per the following illustration:

-

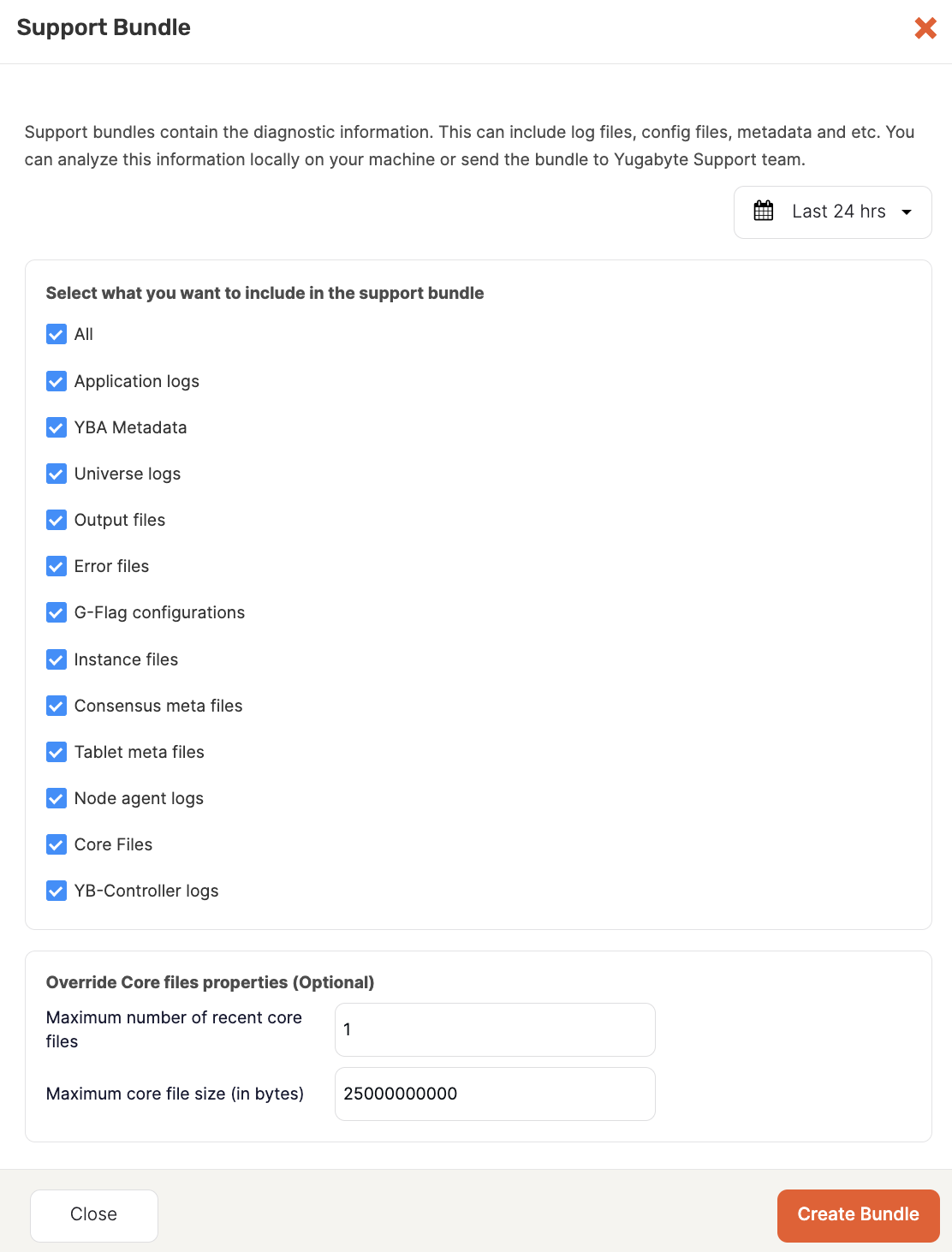

Select the date range and the types of files to be included in the bundle, as per the following illustration:

For information about the components, see Support bundle components.

-

Click Create Bundle.

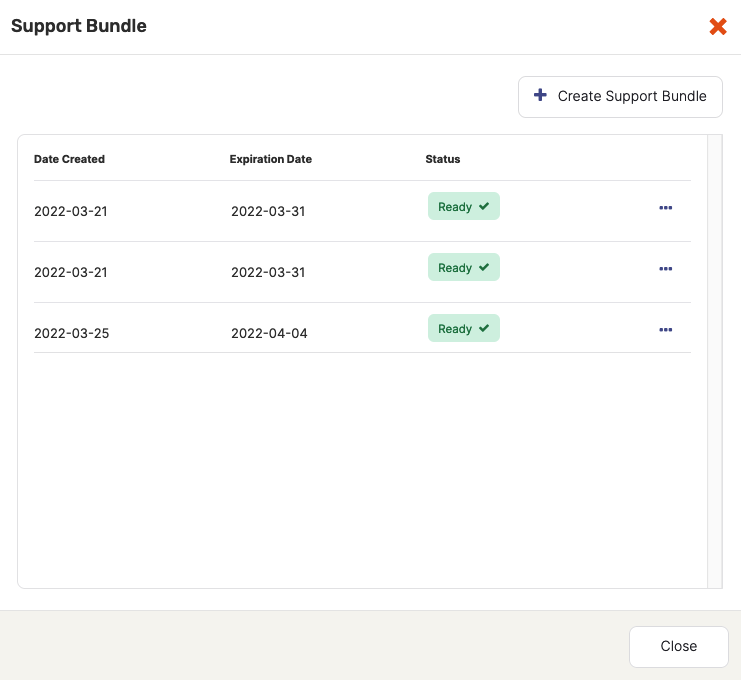

YugabyteDB Anywhere starts collecting files from all the nodes in the cluster into an archive. Note that this process might take several minutes. When finished, the bundle's status is displayed as Ready, as per the following illustration:

The Support Bundles dialog allows you to either download the bundle or delete it if it is no longer needed. By default, bundles expire after ten days to free up space.

Support bundle components

The following sections describe some of the file components of a support bundle.

Application logs

Logs generated by YBA.

YBA Metadata

This component collects a fingerprint of the YBA data. The metadata is collected at the customer level rather than at the global level (as pg_dump would), so that multi-tenancy is respected. The following metadata sub-components are currently included:

- Customer metadata

- Cloud providers metadata

- Universes metadata

- Users metadata

- Instance_type metadata (sizing info, cores)

- Customer_task table metadata

An example of the YBA metadata directory structure is as follows:

support_bundle/
└── YBA/
    └── metadata/
        ├── customer.json
        ├── providers.json
        ├── universes.json
        └── users.json

Universe logs

Logs generated by YugabyteDB in the universe.

Output files

The .out files created in the YugabyteDB nodes.

Error files

The .err files created in the YugabyteDB nodes.

G-Flag configurations

Flags set on the universe, taken from the server.conf file on the YugabyteDB nodes.

Instance files

Instance file in the /master and /tserver directories of YugabyteDB nodes.

Consensus meta files

Consensus-meta folder in the /master and /tserver directories of YugabyteDB nodes.

Tablet meta files

Tablet-meta folder in the /master and /tserver directories of YugabyteDB nodes.

Node agent logs

Node agent logs generated in the YugabyteDB nodes (if node agent is enabled).

Core Files

The Core Files component collects core files from the nodes and mitigates two issues that can occur when collecting them:

- Files can be very large (for example, 200GB+)

- There can be a huge number of files (for example, when a crashloop happens)

When you create a support bundle and select the Core Files component (selected by default), you can set two optional Core Files properties:

- Maximum number of recent core files: YBA collects the N most recent core files. The default value of N is 1.

- Maximum core file size: YBA collects recent core files only if their size is below the specified limit. The default limit is 25000000000 bytes (25GB).

YBA also provides a runtime flag yb.support_bundle.allow_cores_collection, which is used to globally disable cores collection across any new support bundles generated on the platform. This flag can only be set by the SuperAdmin and is true by default.
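The two Core Files properties act as a filter on the core files found on each node. The following Bash sketch illustrates one way such a filter can work. It is not YBA's implementation. It assumes GNU `find` (for `-printf`), and it assumes that the size limit is applied before the most recent files are picked. The function name `select_core_files` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: print the newest core files in a directory, keeping at most
# <max_count> files and skipping any file whose size is not below <max_bytes>.
# Requires GNU find for -printf.
select_core_files() {  # select_core_files <dir> <max_count> <max_bytes>
  local dir=$1 max_count=$2 max_bytes=$3
  # Emit "mtime size path" for each regular file, then sort newest first.
  find "$dir" -maxdepth 1 -type f -printf '%T@ %s %p\n' \
    | LC_ALL=C sort -rn \
    | awk -v max="$max_bytes" '$2 < max { path = $0; sub(/^[^ ]+ [^ ]+ /, "", path); print path }' \
    | head -n "$max_count"
}
```

With the defaults described above, this corresponds to `select_core_files <cores_dir> 1 25000000000`, where `<cores_dir>` is the directory that holds the core files on the node.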

YB-Controller logs

YB-Controller (YBC) logs generated in the /controller/logs folder on the YugabyteDB nodes (if YB-Controller is enabled).

Kubernetes info

This component exclusively includes metadata for Kubernetes-based universes. Note that YBA only collects files if you have sufficient permissions to request the information; otherwise, collection of those files is skipped. The detailed metadata list is as follows:

- Cluster-wide information:

  - Kubectl version
  - Service account permissions
  - Provider storage class info

- Information collected in the YBA namespace:

  - Events.yaml
  - Pods.yaml
  - Configmaps.yaml
  - Services.yaml

- Information collected from each YugabyteDB namespace in the cluster:

  - Helm overrides used
  - Events.yaml (specific columns, sorted by time)
  - Pods.yaml
  - Configmaps.yaml
  - Services.yaml
  - Secrets.txt (includes only secret names, not the actual values)
  - Statefulsets.yaml
  - Persistentvolumeclaims.yaml
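If YBA cannot collect this information (for example, because of missing permissions), you can gather a similar subset manually with `kubectl`. The following Bash sketch is illustrative and is not what YBA runs. The function name and output file names are hypothetical. The sketch covers a single YugabyteDB namespace and leaves out the Helm overrides, because those depend on your release name. As in the support bundle, only secret names are recorded.

```bash
#!/usr/bin/env bash
# Hypothetical helper: save a subset of the Kubernetes information listed above
# for one YugabyteDB namespace into an output directory.
collect_k8s_info() {  # collect_k8s_info <namespace> <out_dir>
  local ns=$1 out=$2 kind
  mkdir -p "$out"
  kubectl version > "$out/kubectl_version.txt" 2>&1
  # Permissions of the identity in your current kubeconfig, within the namespace.
  kubectl auth can-i --list -n "$ns" > "$out/permissions.txt" 2>&1
  kubectl get storageclass -o yaml > "$out/storageclasses.yaml" 2>&1
  # Events sorted by creation time.
  kubectl get events -n "$ns" --sort-by=.metadata.creationTimestamp > "$out/events.txt" 2>&1
  for kind in pods configmaps services statefulsets persistentvolumeclaims; do
    kubectl get "$kind" -n "$ns" -o yaml > "$out/$kind.yaml" 2>&1
  done
  # "-o name" prints only secret names (secret/<name>), never their values.
  kubectl get secrets -n "$ns" -o name > "$out/secrets.txt" 2>&1
}
```

For example, `collect_k8s_info <namespace> ./k8s-info` writes one file per resource type into `./k8s-info`.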

Debug crashing YugabyteDB pods in Kubernetes

If the YugabyteDB pods of your universe are crashing, you can debug them using the following instructions.

Collect core dumps in Kubernetes environments

When dealing with Kubernetes-based installations of YugabyteDB Anywhere, you might need to retrieve core dump files in case of a crash in the Kubernetes pod. For more information, see Specify ulimit and remember the location of core dumps.

The process of collecting core dumps depends on the value of the sysctl kernel.core_pattern, which you can inspect in a Kubernetes pod or node by executing the following command:

cat /proc/sys/kernel/core_pattern

The value of core_pattern can be a literal path or it can contain a pipe symbol:

- If the value of core_pattern is a literal path of the form /var/tmp/core.%p, cores are copied by the YugabyteDB node to a persistent volume directory that you can inspect using the following command:

  kubectl exec -it -n <namespace> <pod_name> -c yb-cleanup -- ls -lht /var/yugabyte/cores

  In the preceding command, the yb-cleanup container of the node is used because the primary YB-Master or YB-TServer container may be in a crash loop.

  To copy a specific core dump file at this location, use the following kubectl cp command:

  kubectl cp -n <namespace> -c yb-cleanup <yb_pod_name>:/var/yugabyte/cores/core.2334 /tmp/core.2334

- If the value of core_pattern contains a | pipe symbol (for example, |/usr/share/apport/apport -p%p -s%s -c%c -d%d -P%P -u%u -g%g -- %E), the core dump is redirected to a specific collector on the underlying Kubernetes node, with the location depending on the exact collector. In this case, it is your responsibility to identify the location to which these files are written and retrieve them.
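Because the retrieval steps depend on the form of `core_pattern`, you can script the decision. The following Bash sketch is a hypothetical helper that classifies a `core_pattern` value and prints the matching next step. It treats any absolute path like the /var/tmp/core.%p case described above, which is an assumption. The third case, a pattern that is neither a pipe nor an absolute path, is not covered above. It reflects the general Linux behavior of writing cores relative to the crashing process's working directory.

```bash
#!/usr/bin/env bash
# Hypothetical helper: classify a kernel.core_pattern value and print a next step.
core_dump_hint() {  # core_dump_hint <core_pattern value>
  local pattern=$1 collector
  case $pattern in
    '|'*)
      # Piped: the kernel hands the core to a collector program on the node.
      collector=${pattern#\|}
      collector=${collector%% *}
      echo "pipe: find where $collector stores cores on the Kubernetes node"
      ;;
    /*)
      echo "path: inspect /var/yugabyte/cores from the yb-cleanup container"
      ;;
    *)
      echo "relative: cores are written relative to the process working directory"
      ;;
  esac
}
```

For example: `core_dump_hint "$(kubectl exec -n <namespace> <pod_name> -c yb-cleanup -- cat /proc/sys/kernel/core_pattern)"`.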

Use debug hooks with YugabyteDB in Kubernetes

You can add your own commands to pre- and post-debug hooks to troubleshoot crashing YB-Master or YB-TServer pods. These commands are run before the database process starts and after the database process terminates or crashes.

For example, to modify the debug hooks of a YB-Master, run the following command:

kubectl edit configmap -n <namespace> ybuni1-asia-south1-a-lbrl-master-hooks

This opens the configmap YAML in your editor.

To add multiple commands to the pre-debug hook of yb-master-0, you can modify the yb-master-0-pre_debug_hook.sh key as follows:

apiVersion: v1
kind: ConfigMap
metadata:
  name: ybuni1-asia-south1-a-lbrl-master-hooks
data:
  yb-master-0-post_debug_hook.sh: 'echo ''hello-from-post'' '
  yb-master-0-pre_debug_hook.sh: |
    echo "Running the pre hook"
    du -sh /mnt/disk0/yb-data/
    sleep 5m
    # other commands here…
  yb-master-1-post_debug_hook.sh: 'echo ''hello-from-post'' '
  yb-master-1-pre_debug_hook.sh: 'echo ''hello-from-pre'' '

After you save the file, the updated commands will be executed on the next restart of yb-master-0.

You can run the following command to check the output of your debug hook:

kubectl logs -n <namespace> ybuni1-asia-south1-a-lbrl-yb-master-0 -c yb-master

Expect an output similar to the following:

...
2023-03-29 06:40:09,553 [INFO] k8s_parent.py: Executing operation: ybuni1-asia-south1-a-lbrl-yb-master-0_pre_debug_hook filepath: /opt/debug_hooks_config/yb-master-0-pre_debug_hook.sh
2023-03-29 06:45:09,627 [INFO] k8s_parent.py: Output from hook b'Running the pre hook\n44M\t/mnt/disk0/yb-data/\n'
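The container log also contains the regular YB-Master output, so it can help to filter for the hook lines. The following sketch assumes that hook-related lines contain either `_debug_hook` or `Output from hook`, as in the sample above. The function name `hook_lines` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical filter: keep only debug-hook lines from a YB-Master or YB-TServer
# container log read on stdin.
hook_lines() {
  grep -E '_debug_hook|Output from hook'
}
```

For example: `kubectl logs -n <namespace> ybuni1-asia-south1-a-lbrl-yb-master-0 -c yb-master | hook_lines`. If the container has already restarted, adding `--previous` to `kubectl logs` shows the log of the prior container instance.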

Perform the follower lag check during upgrades

You can use the follower lag check to ensure that the YB-Master and YB-TServer processes are caught up to their peers. To find this metric in Prometheus, run the following query:

max by (instance) (follower_lag_ms{instance='<ip>:<http_port>'})

- ip represents the YB-Master IP or the YB-TServer IP.

- http_port represents the HTTP port on which the YB-Master or YB-TServer is listening. The YB-Master default port is 7000 and the YB-TServer default port is 9000.

The result is the maximum follower lag, in milliseconds, from the most recent Prometheus scrape of the specified YB-Master or YB-TServer process.
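You can also run this query from a shell through the Prometheus HTTP API (`/api/v1/query`). In the following Bash sketch, `PROM_URL` is an assumption that defaults to `http://localhost:9090`. Set it to the address of the Prometheus server used by your YBA installation. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: build the follower-lag PromQL query for one server and
# send it to the Prometheus HTTP API.

follower_lag_query() {  # follower_lag_query <ip> <http_port>
  printf "max by (instance) (follower_lag_ms{instance='%s:%s'})\n" "$1" "$2"
}

query_follower_lag() {  # query_follower_lag <ip> <http_port>; prints the JSON response
  # -G sends the URL-encoded query as GET parameters.
  curl -sG "${PROM_URL:-http://localhost:9090}/api/v1/query" \
    --data-urlencode "query=$(follower_lag_query "$1" "$2")"
}
```

For example, `query_follower_lag 10.1.1.1 9000` returns a JSON document. Its `data.result[0].value` field holds a timestamp and the lag in milliseconds.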

Typically, the maximum follower lag of a healthy universe is a few seconds at most. The following reasons may contribute to a significant increase in the follower lag, potentially reaching several minutes:

- Node issues, such as network problems between nodes, an unhealthy state of nodes, or inability of the node's YB-Master or YB-TServer process to properly serve requests. The lag usually persists until the issue is resolved.

- Issues during a rolling upgrade, in which each YB-Master or YB-TServer process is stopped, upgraded, and then restarted. While the process is down, writes to the database continue, so the process falls behind its peers. The lag gradually decreases after the YB-Master or YB-TServer has restarted and can serve requests again. However, if an upgrade is performed on a universe that is not in a healthy state to begin with (for example, a node is down or is experiencing an unexpected problem), the upgrade is likely to fail because the follower lag does not drop below the threshold within the allotted time after the processes restart. The default follower lag threshold is 1 minute, and the overall time allocated for a process to catch up is 15 minutes. To remedy the situation, do the following:

  - Bring the node back to a healthy state by stopping and restarting the node, or removing it and adding a new one.

  - Ensure that the YB-Master and YB-TServer processes are running correctly on the node.
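The catch-up behavior described above amounts to polling the lag until it drops below a threshold or a deadline passes. The following Bash sketch illustrates that pattern with the default values mentioned above (a 1-minute threshold and 15 minutes in total). It is not YBA's implementation, and the function name is hypothetical. The probe is any command you supply that prints the current lag as an integer number of milliseconds, for example a wrapper that evaluates the follower_lag_ms query shown earlier.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: poll a lag probe until the lag is below a threshold or time runs out.
# The probe command must print the current follower lag as an integer number of milliseconds.
wait_for_follower_lag() {  # wait_for_follower_lag <threshold_ms> <timeout_s> <interval_s> <probe> [args...]
  local threshold=$1 timeout=$2 interval=$3 lag deadline
  shift 3
  deadline=$(( SECONDS + timeout ))
  while :; do
    lag=$("$@")
    # Only compare when the probe returned a plain integer.
    if [[ $lag =~ ^[0-9]+$ ]] && (( lag < threshold )); then
      echo "caught up: ${lag}ms"
      return 0
    fi
    if (( SECONDS >= deadline )); then
      echo "timed out: last lag ${lag:-unknown}ms"
      return 1
    fi
    sleep "$interval"
  done
}
```

For example, `wait_for_follower_lag 60000 900 10 my_lag_probe 10.1.1.1 9000` waits up to 15 minutes, polling every 10 seconds. `my_lag_probe` is a hypothetical command you provide.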

Run pre-checks before edit and upgrade operations

Before running most edit and upgrade operations, YBA applies the following pre-checks to the universe to ensure that operations do not leave the universe in an unhealthy state.

(Rolling operations) Under-replicated tablets

Symptom (An approximate sample error message)

CheckUnderReplicatedTablets, timing out after retrying 20 times for a duration of 10005ms, greater than max time out of 10000ms. Under-replicated tablet size: 3. Failing....

Details

During upgrades, YBA does not proceed if any tablets have fewer than the desired number of replicas (typically equal to the replication factor (RF) of the universe).

Possible action/workaround

- Fix the root cause of under-replication if it is due to certain YB-TServers being down.

- Increase the timeout that YBA waits for under-replication to clear by increasing the value of the runtime configuration flag yb.checks.under_replicated_tablets.timeout.

- If temporary unavailability during the upgrade is acceptable, disable this check briefly by turning off the runtime configuration flag yb.checks.under_replicated_tablets.enabled for the universe.
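If you prefer to script a temporary change to one of these runtime configuration flags, the following Bash sketch shows one possible approach through the YBA REST API. The endpoint path, the `X-AUTH-YW-API-TOKEN` header, and the variables `YBA_URL`, `YBA_API_TOKEN`, `CUSTOMER_UUID`, and `UNIVERSE_UUID` are assumptions. Verify them against the API reference for your YBA version. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: set a universe-scoped runtime configuration flag through
# the YBA REST API. Endpoint shape and header name are assumptions; verify them
# against the API reference for your YBA version.

runtime_config_url() {  # runtime_config_url <yba_url> <customer_uuid> <scope_uuid> <key>
  printf '%s/api/v1/customers/%s/runtime_config/%s/key/%s\n' "$1" "$2" "$3" "$4"
}

set_runtime_flag() {  # set_runtime_flag <key> <value>
  # Stop with an error if any required variable is unset or empty.
  : "${YBA_URL:?}" "${YBA_API_TOKEN:?}" "${CUSTOMER_UUID:?}" "${UNIVERSE_UUID:?}"
  curl -sS -X PUT \
    -H "X-AUTH-YW-API-TOKEN: $YBA_API_TOKEN" \
    -H 'Content-Type: text/plain' \
    --data "$2" \
    "$(runtime_config_url "$YBA_URL" "$CUSTOMER_UUID" "$UNIVERSE_UUID" "$1")"
}
```

For example, run `set_runtime_flag yb.checks.under_replicated_tablets.enabled false` before the upgrade. Afterward, run `set_runtime_flag yb.checks.under_replicated_tablets.enabled true` to restore the check.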

(Rolling operations) Are nodes safe to take down?

Symptom (An approximate sample error message)

Nodes are not safe to take down:

TSERVERS: [10.1.1.1] have a problem: Server[YB Master - 10.1.1.1:7100] ILLEGAL_STATE[code 9]: 3 tablet(s) would be under-replicated.

Example: tablet c8bf6d0092004dee91c1df80e9f4223a would be under-replicated by 1 replicas

Details

During upgrades, YBA does not proceed if taking down the nodes would leave any tablets or the YB-Masters with fewer than the desired number of replicas (typically equal to the replication factor (RF)).

Possible action/workaround

- Fix the root cause of under-replication if it is due to certain YB-Masters or YB-TServers being down.

- Increase the timeout that YBA waits for under-replication to clear by increasing the value of the runtime configuration flag yb.checks.nodes_safe_to_take_down.timeout.

- Allow YB-TServers to lag further behind their peers than the default while still being considered healthy by changing the runtime configuration flag yb.checks.follower_lag.max_threshold.

- If temporary unavailability during the rolling operation is acceptable, disable this check briefly by turning off the runtime configuration flag yb.checks.nodes_safe_to_take_down.enabled for the universe.

Leaderless tablets detected on the universe

Symptom (An approximate sample error message)

There are leaderless tablets: [b8a3e62d6868490abca0aba82e3477d7]

Details

YBA verifies that the universe is in a healthy state before starting operations. If any tablets do not have leaders, this check prevents further progress.

Possible action/workaround

- Fix the root cause of certain tablets having no leaders. You may need to contact Yugabyte Support.

- If the situation is temporary, you can raise the timeout for this check using the runtime configuration flag yb.checks.leaderless_tablets.timeout.

- If the universe is in an unhealthy state and you are comfortable with the risk, turn off the check using the runtime configuration flag yb.checks.leaderless_tablets.enabled.

Cluster consistency check

Symptom (An approximate sample error message)

Unexpected TSERVER: 10.1.1.1, node with such ip is not present in cluster

Unexpected MASTER:, 10.1.1.1 node yb-node-1 is not marked as MASTER

Details

YBA verifies that the configuration of deployed YB-Masters and YB-TServers matches the YBA metadata (universe_details_json). In general, any discrepancy may indicate that some operations were performed on the YB-Masters/YB-TServers without YBA's knowledge and may need to be reconciled with the YBA metadata.
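When investigating such a discrepancy, it can help to compare the node IP addresses that YBA expects with those the cluster actually reports. The following Bash sketch uses `comm` on two plain-text files of IP addresses, one per line. How you produce those files is up to you (for example, from the Nodes page in YBA and from the output of `yb-admin list_all_tablet_servers`). The function name `diff_node_sets` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare two files of node IPs (one per line).
# "unexpected" = present in the cluster but not in YBA's metadata;
# "missing"    = expected by YBA but not present in the cluster.
diff_node_sets() {  # diff_node_sets <expected_ips_file> <actual_ips_file>
  comm -13 <(sort -u "$1") <(sort -u "$2") | sed 's/^/unexpected: /'
  comm -23 <(sort -u "$1") <(sort -u "$2") | sed 's/^/missing: /'
}
```

An empty result means that both files list the same set of addresses.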

Possible action/workaround

- Fix the root cause of the inconsistency. You may need to contact Yugabyte Support.

- If the inconsistency was verified to be harmless, you can turn off the check using the runtime configuration flag yb.task.verify_cluster_state. Exercise caution before proceeding with such an inconsistency, as it can have serious consequences.

Follower lag check

Symptom (An approximate sample error message)

CheckFollowerLag, timing out after retrying 10 times for a duration of 10000ms

Details

After a node is restarted as part of a rolling operation, YBA verifies that the YB-Masters and YB-TServers catch up to their peers. If this does not happen in a specified duration, YBA aborts the rolling operation.

Possible action/workaround

- Fix any unhealthy nodes in the cluster or problematic network conditions that prevent the node from catching up to its peers.

- Allow a restarted node more time to catch up to its peers by increasing the timeout for the runtime configuration flag yb.checks.follower_lag.timeout.

- If temporary unavailability is acceptable, disable this check briefly by turning off the runtime configuration flag yb.checks.follower_lag.enabled.